Apollo-1:

The Neuro-Symbolic Foundation Model that Solves Task-Oriented Conversational AI

Apollo-1 Agents

Complete steerability, unparalleled control

Transformer-based LLMs don't make good agents

Traditional language models rely on transformer architectures, which excel at pattern recognition and language generation. They are particularly effective for a variety of tasks such as text generation, translation, and summarization.

However, in agentic use cases, where the model needs to perform actions, make decisions, or interact with tools, transformer-based LLMs face significant challenges.

That’s where Apollo-1 comes in

Transparency

A transparent, white-box model

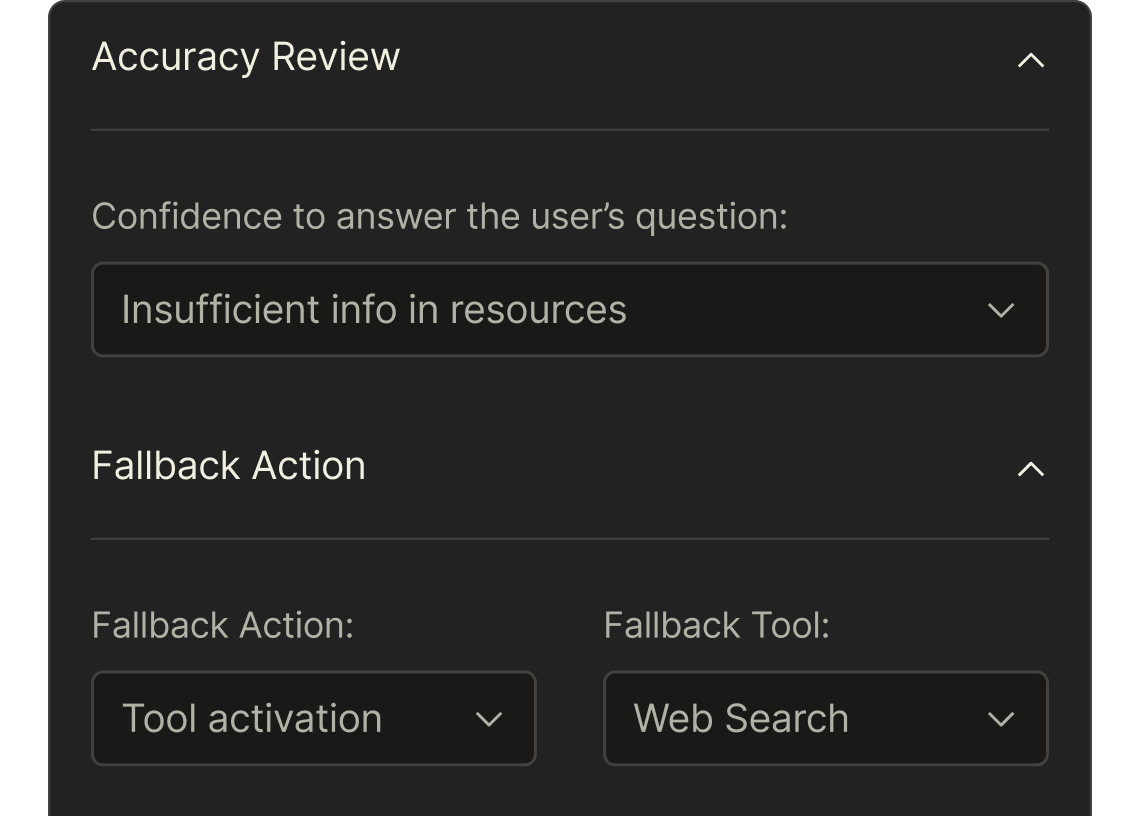

Controllability

An advanced instruction environment

Predictability

A structured reasoning process

Tool-use

100% successful tool-use

Fine-tuning

Fine-tuning via human feedback

Better suited for agents

The neuro-symbolic approach bridges the gap between the flexibility of neural networks and the precision of symbolic logic. By merging neural networks with symbolic reasoning, Apollo-1 offers several key advantages.

Symbolic components reduce the need for extensive datasets. By leveraging existing knowledge bases and rules, Apollo-1 performs effectively even with limited training data.

A higher level of understanding & precision

At the heart of Apollo-1’s capabilities lies the Structured Interaction State. This state is a symbolic, parameterized representation of each interaction, created by collecting and organizing sensory data.

By converting unstructured inputs into a structured format, Apollo-1 achieves a higher level of understanding and precision in its responses.
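To make the idea of a symbolic, parameterized representation concrete, here is a minimal sketch in Python. It is not Apollo-1's actual schema or parser: the `InteractionState` class, the `parse_turn` function, the `book_flight` intent, and the parameter names are all hypothetical, and a toy regex extractor stands in for whatever component really structures the input.

```python
import re
from dataclasses import dataclass, field


@dataclass
class InteractionState:
    """Hypothetical symbolic state for one user turn (illustrative only)."""
    intent: str
    parameters: dict = field(default_factory=dict)

    def missing(self, required):
        """Return the required parameter names that have not been filled yet."""
        return [name for name in required if name not in self.parameters]


def parse_turn(text: str) -> InteractionState:
    """Toy extractor: maps a free-text booking request onto named parameters."""
    params = {}
    route = re.search(r"\bfrom (\w+) to (\w+)\b", text)
    if route:
        params["origin"], params["destination"] = route.group(1), route.group(2)
    date = re.search(r"\bon (\d{4}-\d{2}-\d{2})\b", text)
    if date:
        params["date"] = date.group(1)
    intent = "book_flight" if "flight" in text.lower() else "unknown"
    return InteractionState(intent=intent, parameters=params)


state = parse_turn("Book a flight from Boston to Denver on 2025-03-14")
print(state)
print(state.missing(["origin", "destination", "date", "passengers"]))
```

Once the request is held as named parameters rather than raw text, downstream logic can check exactly what is known and what still needs to be asked, which is the kind of precision the structured format is meant to provide.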

A new level of safety & accuracy

Combining generative and rule-based approaches minimizes the risks typically associated with AI outputs.

Apollo-1 adheres to predefined rules to avoid generating inappropriate or harmful content.
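One way to picture a generative step combined with rule-based checks is the sketch below. It is an assumption-laden illustration rather than a description of how Apollo-1 enforces its rules: the rule names, the `violated_rules` and `guarded_reply` helpers, and the fallback message are all hypothetical, and the candidate reply is passed in as a plain string instead of coming from a real model.

```python
from typing import Callable, List, Tuple

# Each rule is (name, predicate); the predicate returns True when the text is allowed.
Rule = Tuple[str, Callable[[str], bool]]

# Hypothetical rules for illustration only.
RULES: List[Rule] = [
    # Reject replies containing a long all-digit token (e.g. a card number).
    ("no_card_numbers",
     lambda text: not any(tok.isdigit() and len(tok) >= 13 for tok in text.split())),
    # Reject replies that promise a refund outright.
    ("no_refund_promises",
     lambda text: "guaranteed refund" not in text.lower()),
]

FALLBACK = "I can't help with that directly, but I can connect you with an agent."


def violated_rules(text: str) -> List[str]:
    """Return the names of all rules the text breaks."""
    return [name for name, allowed in RULES if not allowed(text)]


def guarded_reply(candidate: str) -> str:
    """Pass the generated candidate through only if no rule is violated."""
    return candidate if not violated_rules(candidate) else FALLBACK


print(guarded_reply("Your order ships tomorrow."))
print(guarded_reply("You get a guaranteed refund today."))
```

Keeping the rules as explicit, named predicates means every blocked reply can be traced to a specific rule, which fits the transparency goals described earlier on the page.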